Some years ago I posted about the difference between Archiving and Saving. In the world of archiving and records management, the terms are distinct. Archiving means designating items or content for long-term preservation, and defined criteria typically guide the selection. In essence, there’s a process. It’s not just randomly saving everything because digital storage space seems unlimited.

However, since I changed jobs and crossed over to the dark side of data, another nuance has developed. I frequently find myself having to define storage, archiving, and deleting, and then translate what each term means from a data perspective versus a records management/archival one. In the data world, archiving essentially means storing data on cheaper disk space, which may take slightly longer to access. In some scenarios, the data is retained for a long time. In others, it is a large volume of data that will only be needed for a couple of years. Either way, it differs from the archival definition of the term.

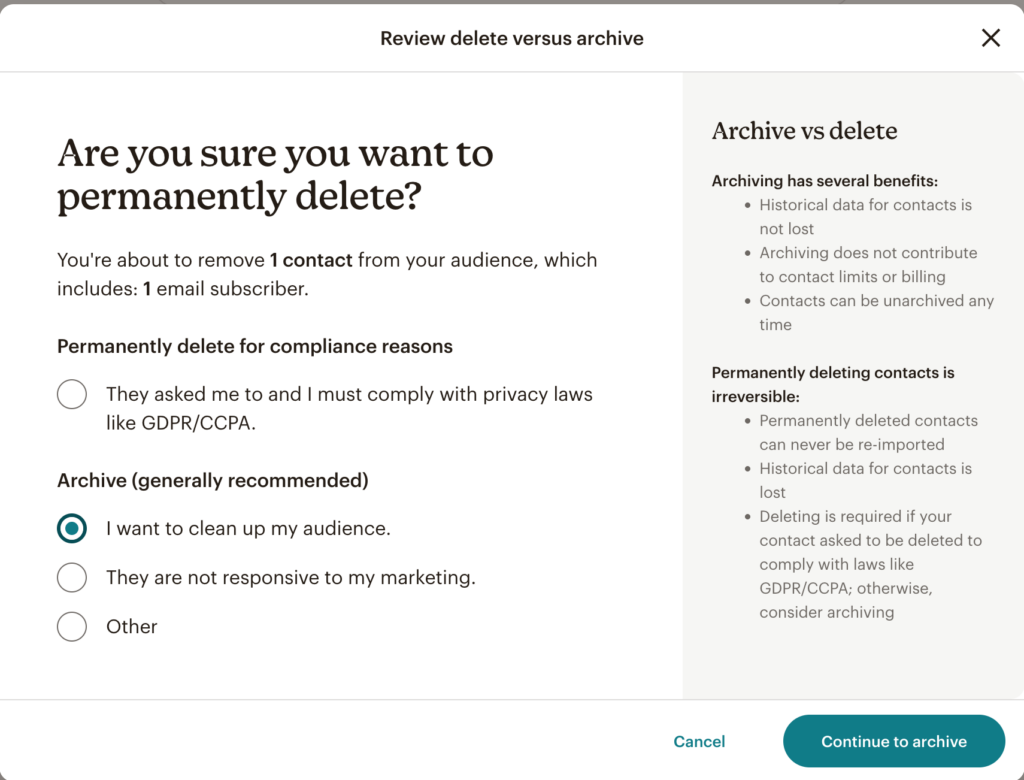

Yet in many circumstances, I find the line between the two getting blurry. For example, I had to migrate my list of subscribers from one service to another, and I took the opportunity to clean up the list by removing invalid email addresses. To me, this was an obvious delete. I had no reason to keep outdated email addresses. When I hit the delete key, the system prompted me to confirm my decision and provide a reason. Even more puzzling, my answer belonged under archiving!

It struck me as odd that permanently deleting wasn’t an option for cleaning up my audience. To me, this builds bad habits. Neither digital storage nor digital archive space is limitless. It’s not good practice to save everything without a valid reason simply because the space is available. When would I ever need to refer to an invalid email address? How would this information be valuable to me? Why is “permanently delete” reserved for compliance reasons?

The point is, we shouldn’t be conditioned to choose “archive” when “delete” makes sense. It’s healthy to get rid of things that no longer have value to us.